SPADES - Total Operational Weather Readiness - Satellites (TOWR-S)

The Satellite Product Analysis and Distribution Enterprise System (SPADES) is a software application that transforms GOES satellite space weather data into products, primarily for the NWS Space Weather Prediction Center (SWPC).

SPADES Algorithms

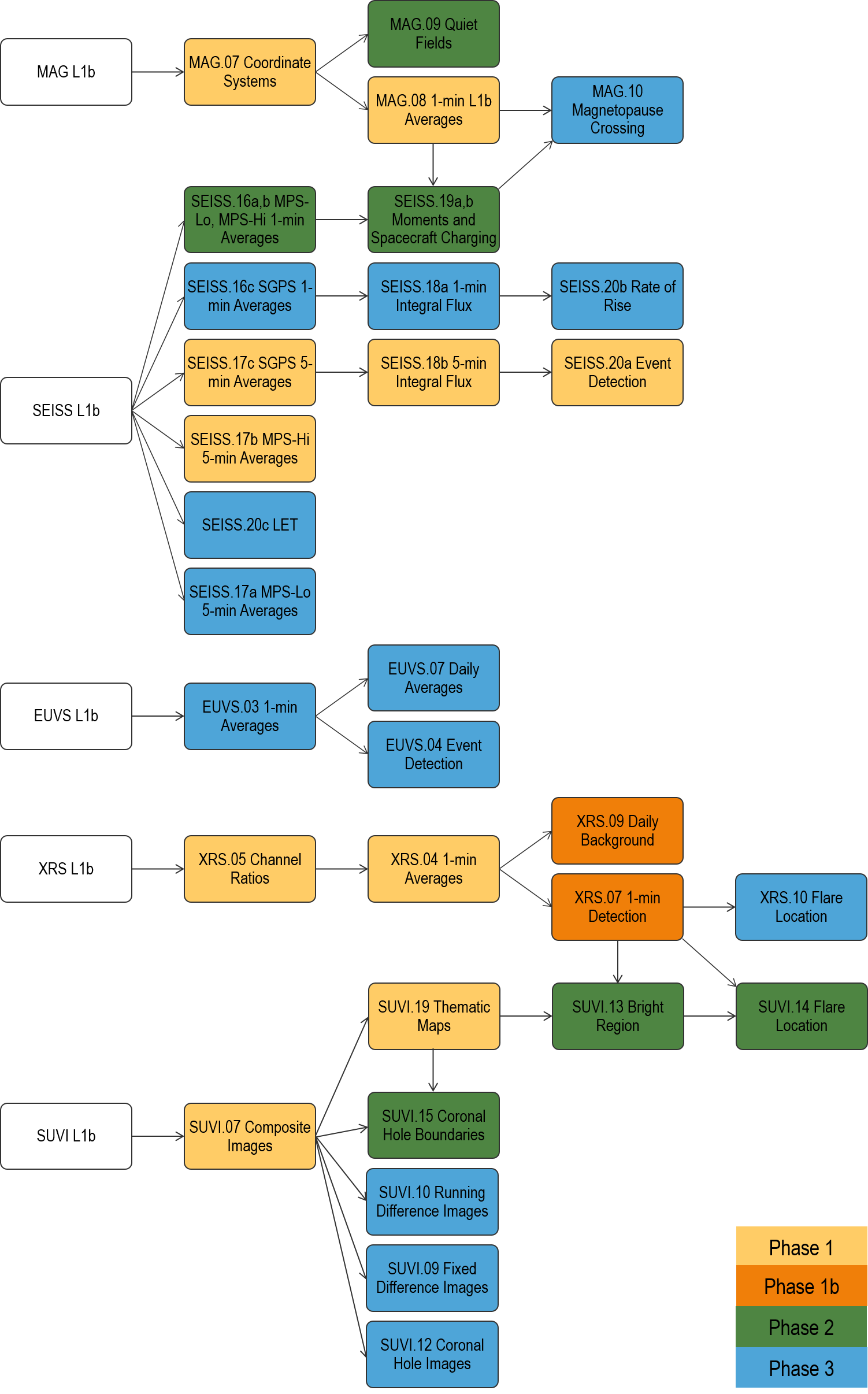

The main body of the SPADES system consists of 34 algorithms, each with unique output products.

| Product Name | GOES-16 | GOES-18 |
|---|---|---|
| Phase I (Continuity of Operations) | | |
| MAG.07: Various Coordinate Systems (from L1b) | Operational | Operational |
| MAG.08: Mag 1-min Averages (from MAG.07) | Operational | Operational |
| SEISS.17b (MPSH): 5-min Averages (from L1b) | Operational | Operational |
| SEISS.17c (SGPS): 5-min Averages (from L1b) | Operational | Operational |
| SEISS.18b (SGPS): 5-min Integral Flux (from SEISS.17c) | Operational | Operational |
| SEISS.20a (SGPS): Event Detection (from SEISS.18b) | Operational | Operational |
| XRS.04: 1-min Averages for EXIS XRS (from L1b) | Operational | Operational |
| XRS.05: Channel Ratio (from L1b) | Operational | Operational |
| SUVI.07: Composite Images (from L1b) | Operational | Operational |
| SUVI.19: Thematic Maps (from SUVI.07) | Operational | Operational |
| XRS.07a: 1-min Event Detection (from XRS.04) | Operational | Operational |
| XRS.07b: Post Event Summary (from XRS.04) | Operational | Operational |
| XRS.09: Daily Background (from XRS.04) | Operational | Operational |
| Phase II | | |
| MAG.09: Quiet Fields (from MAG.07) | Operational | Operational |
| SEISS.16a: 1-min Averages – SEISS MPSL (from L1b) | Operational | Operational |
| SEISS.16b: 1-min Averages – SEISS MPSH (from L1b) | Operational | Operational |
| SEISS.19a: Moments (from MPSL SEISS.16a) | Operational | Operational |
| SEISS.19b: Moments (from MPSH SEISS.16b) | Operational | Operational |
| XRS.10: Flare Location (from XRS.07) | Operational | Operational |
| SUVI.13: Bright Regions (from SUVI.19 & XRS.07) | Operational | Operational |
| SUVI.14: Flare Location (from SUVI.13 & XRS.07) | Operational | Operational |
| SUVI.15: Coronal Hole Bndr (SUVI.19 & SUVI.07) | Operational | Operational |
| Phase III | | |
| MAG.10: Magnetopause Crossing (from MAG.08) | Operational | Operational |
| SEISS.17a: 5-min Averages – SEISS MPSL (from L1b) | Operational | Operational |
| SEISS.16c: 1-min Averages – SEISS SGPS (from L1b) | Operational | Operational |
| SEISS.18a: 1-min Integral Flux (from SGPS SEISS.16c) | Operational | Operational |
| SEISS.20b: Rate of Rise (from SGPS SEISS.18a) | Operational | Operational |
| SEISS.20c: Linear Energy Transfer (from EHIS L1b) | Operational | Operational |
| SUVI.10: Running Diff. Images (from SUVI.07) | Operational | Operational |
| SUVI.12: Coronal Hole Images (from SUVI.07) | Operational | Operational |
| SUVI.09: Fixed Diff. Images (from SUVI.07) | Operational | Operational |
| EUVS.03: 1-min averages for EXIS/EUVS (from L1b) | Operational | Operational |
| EUVS.04a-e: Event Detection (from EUVS.03) | Operational | Operational |
| EUVS.07: Daily Averages (from EUVS.03) | Operational | Operational |

SPADES takes, as its primary inputs, GOES-R Series space weather Level 1b data from the EXIS, MAG, SEISS and SUVI instruments. The content and format of these input datasets are described in the GOES-R Product User Guide (Volume 3). For NWS SPADES processing, the primary sources of these GOES-R Series input datasets are the NWS GRB antenna systems. A secondary (alternate) source of input data will be the NESDIS PDA system, although that source has not yet been used to feed the NWS instances of SPADES. A set of algorithm theoretical basis documents (ATBDs, available through the GOES-R Program) exists for the SPADES Level 2 (L2) algorithms. These descriptions were developed by NCEI during the initial development of the L2 algorithm software. Each SPADES algorithm produces one or more L2 output products whose main consumers are SWPC, other government entities, industry partners, and public users. Some SPADES algorithms produce output used by subsequent SPADES algorithms as input. NCEI maintains the L2 product archive which contains data-of-record and is made publicly available.

The SPADES algorithms are categorized into three groups: Phases I, II and III, with the thirteen Phase I algorithms providing the critical “continuity of operations” (CONOPS) between the GOES-NOP and GOES-R satellite series. The Phase I algorithms are deployed into NWS operations; the Phase II and III algorithms are still in developmental testing. Each algorithm is executed per satellite and data source. For example, SPADES currently produces two complete (and separate) sets of images: one set derived from GOES-16 SUVI data, and the other derived from GOES-18 SUVI data. Additional sets will be generated for each satellite from PDA L1b data once the feed is fully implemented.

SPADES is primarily a Python application. The current version of SPADES running in NWS operations is implemented in Python version 3.10, while the NCEI algorithm code runs Python version 3.9. A small percentage of the SPADES algorithms are implemented as Python wrappers around legacy C++ code. In general, the NCEI-provided SPADES algorithm code ports to the NWS operating environment with little or no refactoring.

NCEI vs NWS

The NCEI and NWS versions of SPADES are not identical. The three main factors that led to their differences are:

1. The NCEI version of SPADES was partly developed before NWS/IDP application onboarding standards (including allowed and prohibited packages) existed. In their development of SPADES, NCEI chose packages such as Apache Storm and Zookeeper (which provide coordination of distributed processes). In the NWS version of SPADES, the Storm and Zookeeper functions were replaced with RabbitMQ and NWS custom Python code.

2. The NCEI version of SPADES receives its L1b data from a direct push from PDA and polls its source directories when executing an L2 algorithm. The NWS version of SPADES receives its L1b via two separate interfaces: the NWS GRB antennas via LDM (primary), and PDA via a direct push (backup). Furthermore, the NWS SPADES processes new files as they are made available, which lowers the overall end-to-end latency of generated L2 products.

3. The IDP operational environment has configuration, logging and monitoring requirements that did not exist when the NCEI version of SPADES was first developed. The IDP requirements were implemented only in the NWS version of SPADES, as it was unnecessary for NCEI to include them.

The primary differences between the NCEI and NWS versions of SPADES are in the control segment or auxiliary components, not the algorithms. It is a project objective to converge the NCEI and NWS versions of SPADES, or at least curtail further divergences between the two versions. As long as the two versions differ, a certain amount of work will continue to be needed to rehost NCEI code deliveries to the NWS operating environments.

RabbitMQ

The NWS version of SPADES uses a RabbitMQ message broker to drive processing. Messaging is used for many purposes, such as announcing the arrival of Level 1b input files for processing. SPADES also uses JavaScript Object Notation (JSON) for purposes such as formatting character strings sent through RabbitMQ. SPADES runs in a virtualized environment. For the operational instances of SPADES on IDP, there is a set of interoperating virtual machines (VMs), where each VM serves a specific function, e.g. algorithm execution, LDM, administration, RabbitMQ, etc. Algorithm processing is distributed across the product/algorithm VMs to balance load and ensure the timely delivery of products to SWPC.
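To make the JSON-over-RabbitMQ idea concrete, the following is a minimal sketch of how a file-arrival notification could be serialized and validated. It is not the actual SPADES message schema: the field names (`event`, `satellite`, `instrument`, `path`, `received_utc`) and both helper functions are hypothetical, and the publishing step through the broker is deliberately left out.

```python
import json
from datetime import datetime, timezone

# Hypothetical set of fields a consumer would insist on.
REQUIRED_FIELDS = {"event", "satellite", "instrument", "path"}


def build_arrival_message(satellite, instrument, path, received=None):
    """Serialize a hypothetical 'L1b file arrived' notification as a JSON string."""
    received = received or datetime.now(timezone.utc)
    payload = {
        "event": "l1b_file_arrival",  # hypothetical event name
        "satellite": satellite,
        "instrument": instrument,
        "path": path,
        "received_utc": received.isoformat(),
    }
    # sort_keys gives a stable string, which makes log comparison easier.
    return json.dumps(payload, sort_keys=True)


def parse_arrival_message(body):
    """Decode a message body and reject it if required fields are absent."""
    msg = json.loads(body)
    missing = REQUIRED_FIELDS - msg.keys()
    if missing:
        raise ValueError(f"message missing fields: {sorted(missing)}")
    return msg
```

A consumer on an algorithm VM would call `parse_arrival_message` on each message body before acting on it, so that malformed messages fail early rather than during algorithm execution.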

Control Segment

The SPADES software component which orchestrates the execution of the algorithms is referred to as the “control segment.” The control segment relies upon a combination of configurable business logic (CBL) files and dynamic conditions to trigger and modulate the execution of algorithms. As illustrated below, some SPADES algorithms (e.g. MAG.07) are executed after the arrival of only GOES Level 1b data, whereas other algorithms (e.g. MAG.08) utilize the outputs of other upstream algorithms as inputs. All algorithms provide new output products to the outbound LDM, and if necessary, other downstream algorithms. However, some algorithms only create products given certain criteria, such as during a solar flare.
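The upstream/downstream relationships described here can be pictured as a dependency graph. The sketch below encodes a small subset of the product table and derives a valid execution order with the standard-library `graphlib` module (Python 3.9+). It is illustrative only: SPADES triggers algorithms as data arrives rather than from a precomputed order, and the `DEPENDS_ON` mapping and helper names are assumptions made for this example.

```python
from graphlib import TopologicalSorter

# algorithm -> set of inputs, taken from a subset of the product table.
# "L1b" is a pseudo-node standing in for incoming Level 1b data.
DEPENDS_ON = {
    "MAG.07": {"L1b"},
    "MAG.08": {"MAG.07"},
    "MAG.09": {"MAG.07"},
    "MAG.10": {"MAG.08"},
    "XRS.04": {"L1b"},
    "XRS.07a": {"XRS.04"},
    "XRS.09": {"XRS.04"},
}


def execution_order(depends_on):
    """Return algorithms in an order where every input precedes its consumer."""
    ordered = TopologicalSorter(depends_on).static_order()
    return [name for name in ordered if name != "L1b"]


def downstream_of(product, depends_on):
    """Return the algorithms that directly consume the given product."""
    return sorted(alg for alg, inputs in depends_on.items() if product in inputs)
```

For instance, `downstream_of("MAG.07", DEPENDS_ON)` lists the algorithms that need to be notified when a new MAG.07 product is written.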

The control segment implements the algorithm orchestration using various files and by monitoring RabbitMQ message queues. CBLs are used along with incoming data streams of Level 1b and/or Level 2 files to create relatively transient run-level configuration (RLC) files, which prescribe and delegate algorithm execution using the current data stream. The control segment augments and completes an RLC instance based on incoming data and messages received from various daemon processes. Time-series data arrives at a consistent cadence per product, so the number and arrival time of the input files are relatively predictable. The inputs to other algorithms (e.g. SUVI imagery) consist of discontinuous segments of data. Some algorithms require data from multiple sources; the application verifies that sufficient input data has been collected before initiating the algorithm’s execution, though it will proceed with an incomplete dataset if input latency exceeds the timeout threshold. The control segment determines which algorithm(s) can run based on the current conditions, creates the necessary algorithm environment(s), and executes the appropriate algorithm(s). RLCs also specify the directories wherein the algorithm should run and the RLC should be archived. The control segment manages RLCs by checking for expiration, storing them in JSON, and archiving them. It also provides SPADES processes with identifying information so that developers and the system itself can ascertain what a process is and what it does, and so that the processes can be uniquely associated with logging messages. RLC files are saved for a period of time to facilitate after-incident response and troubleshooting.
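The "wait for sufficient input, but proceed after a timeout" rule can be sketched as a small state check. The class below is a hypothetical, heavily simplified stand-in for an RLC; the real RLC structure, field names, and timeout handling are not described in this document, so every name here is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class RunLevelConfig:
    """Hypothetical, simplified stand-in for a run-level configuration (RLC)."""

    algorithm: str
    required_inputs: set
    created_at: float  # seconds on any consistent clock
    timeout_s: float
    received_inputs: set = field(default_factory=set)

    def add_input(self, name):
        """Record an input as received; names the algorithm does not need are ignored."""
        if name in self.required_inputs:
            self.received_inputs.add(name)

    def decision(self, now):
        """Return 'run', 'run_incomplete', or 'wait' for the current time."""
        if self.received_inputs >= self.required_inputs:
            return "run"  # every required input is present
        if now - self.created_at > self.timeout_s:
            return "run_incomplete"  # timeout exceeded: proceed with what is there
        return "wait"
```

Passing `now` explicitly, rather than reading the clock inside `decision`, keeps the rule easy to test and to replay during after-incident analysis.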

The control segment performs other functions, such as keeping track of the locations of the necessary SPADES components on the filesystem. The SPADES control segment and utility software handle the management of log and archive files, including creating manifests to keep track of archive files and performing integrity checks via file size and checksum tracking. The control segment and infrastructure software ensure a systematic, predictable approach to process execution and management. The control segment also includes Python code that distinguishes between satellites and environments (e.g. development versus IDP-PROD-Boulder). SPADES includes Python scripts for RabbitMQ queue configuration, status/diagnostics and management.
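As an illustration of manifest-based integrity checking by file size and checksum, here is a minimal sketch. The document does not say which checksum algorithm or manifest layout SPADES uses; SHA-256, the JSON manifest format, and the function names below are assumptions.

```python
import hashlib
import json
from pathlib import Path


def file_record(path, chunk_size=1 << 20):
    """Return name, size in bytes, and SHA-256 digest for one file."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read in chunks so large archive files are not loaded into memory at once.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return {
        "name": Path(path).name,
        "size": Path(path).stat().st_size,
        "sha256": digest.hexdigest(),
    }


def write_manifest(paths, manifest_path):
    """Write a JSON manifest (hypothetical layout) covering the given files."""
    records = [file_record(p) for p in paths]
    Path(manifest_path).write_text(json.dumps(records, indent=2))
    return records


def verify(path, record):
    """Check a file against its manifest record by size and checksum."""
    current = file_record(path)
    return current["size"] == record["size"] and current["sha256"] == record["sha256"]
```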

Execution

SPADES depends on configuration files and lookup tables (LUTs) for proper execution. Some LUTs are updated in sync with code updates, but other LUT updates can take place independently of code updates. Some LUTs contain spacecraft and/or instrument settings obtained from the GOES-R Program and used throughout the GOES-R Ground Segment. Updates of these GOES-R lookup tables, in the SPADES environment, must sometimes be synchronized with changes to the GOES-R data stream. The SPADES system also includes ancillary utility software for analysis and maintenance, such as latency analysis and system initialization.

While most SPADES execution is data/event driven, some elements are schedule driven and thus rely upon the cron utility. The cron-driven elements include input/output file archiving, old file cleanup (e.g. log file rollover and deletion, and product storage management), SPADES system health checks, and other routine system maintenance activities.
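A cron-driven cleanup task of the kind listed above might look like the following sketch. The function name, the `*.log` default pattern, and the age-by-modification-time rule are assumptions; the actual SPADES cleanup scripts and retention settings are not given here.

```python
import time
from pathlib import Path


def purge_old_files(directory, max_age_days, pattern="*.log", now=None):
    """Delete files matching pattern whose modification time is older than max_age_days.

    Returns the sorted names of the files that were removed.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # 86400 seconds per day
    removed = []
    for path in Path(directory).glob(pattern):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path.name)
    return sorted(removed)
```

A script wrapping this function could be scheduled from cron at whatever interval the environment requires, with `max_age_days` read from configuration.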

Logging and Monitoring

The NWS instances of SPADES include logging and monitoring functionality. SPADES performs Python/Pika event logging. Scripts handle tasks such as log file creation, naming, rotation, and purging logs older than a configurable number of days. SPADES includes time-stamped application logs. The tar and gzip utilities are used for compression, storage, and decompression of archived files and logs. The NWS instances of SPADES follow NWS/NCO logging standards that pertain to log format, content, configurable logging levels (i.e. more or less verbose logging), and use of logging keywords such as INFO and DEBUG messages to aid in after-incident issue diagnosis. The SPADES logs also enable proper monitoring by NCO.
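Configurable verbosity with time-stamped messages can be sketched with Python's standard `logging` module. The format string below is an assumption chosen for illustration and is not the NWS/NCO logging standard, and `make_logger` is a hypothetical helper.

```python
import logging


def make_logger(name, level_name="INFO", stream=None):
    """Return a logger with a time-stamped format and a configurable level."""
    logger = logging.getLogger(name)
    logger.setLevel(level_name.upper())  # accepts names such as "DEBUG" or "INFO"
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)  # stream=None means sys.stderr
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

Switching `level_name` from `"INFO"` to `"DEBUG"` in configuration is then enough to get more verbose output without touching the code that emits the messages.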

SPADES includes Python scripts for archiving Level 1b input data, logs, and Level 2 output products (the latter in support of the NCEI archive). The archiver for the Level 2 products tars and gzips the product files. Each tar/gzip file corresponds to one Level 2 product (e.g. one tar/gzip file for EXIS/XRS Flare Detection, another for SEISS/MPSH one-minute Average Fluxes, another for SUVI Composite Images of the 131 Angstrom band, and so on). The archive files are retrieved by the NCEI archive system in Asheville, NC on an hourly basis.
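The one-archive-per-product arrangement can be sketched with the standard `tarfile` module, which writes gzip-compressed tar files directly. The archive naming scheme (`<product_id>.tar.gz`) and function names are assumptions for illustration only.

```python
import tarfile
from pathlib import Path


def archive_product(product_id, files, out_dir):
    """Bundle the files of one Level 2 product into a single gzip-compressed tar file."""
    out_path = Path(out_dir) / f"{product_id}.tar.gz"  # hypothetical naming scheme
    with tarfile.open(out_path, "w:gz") as tar:
        for f in files:
            # Store only the file name so the archive does not embed local paths.
            tar.add(str(f), arcname=Path(f).name)
    return out_path


def list_archive(archive_path):
    """Return the sorted member names of a tar/gzip archive."""
    with tarfile.open(archive_path, "r:gz") as tar:
        return sorted(tar.getnames())
```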

Utility Scripts: Command-Line Interface

SPADES includes a sizable set of utility scripts, some of which are used in the operational setting. The others are for development, testing, troubleshooting, and transitioning to operations. The SPADES command-line interface enables operations such as starting and stopping SPADES processes (including SPADES-supporting processes), and listing information about SPADES processes. The command-line interface enables manual initiation of specific tasks that might be automated during normal operations. This includes monitoring for incoming data, executing one or more specific SPADES algorithms on demand, or manually pushing algorithm output products onto the LDM output queue. This interface also enables specification of a host for remote command execution, supports different levels of logging, and can display help and debugging information.
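The shape of such a command-line interface can be sketched with `argparse`. This is not the actual SPADES CLI: the program name, subcommands (`start`, `stop`, `list`, `run`), and option names (`--host`, `--log-level`) are all hypothetical, chosen only to mirror the capabilities described above.

```python
import argparse


def build_parser():
    """Build a hypothetical parser mirroring the operations described in the text."""
    parser = argparse.ArgumentParser(prog="spades-cli-sketch")
    parser.add_argument("--host", default="localhost",
                        help="host on which to execute the command")
    parser.add_argument("--log-level", default="INFO",
                        choices=["DEBUG", "INFO", "WARNING", "ERROR"])
    sub = parser.add_subparsers(dest="command", required=True)
    for name in ("start", "stop", "list"):
        sub.add_parser(name, help=f"{name} SPADES processes")
    run = sub.add_parser("run", help="execute specific algorithms on demand")
    run.add_argument("algorithms", nargs="+", help="algorithm IDs, e.g. MAG.07")
    return parser
```

With this layout, `build_parser().parse_args(["--host", "vm1", "run", "MAG.07"])` yields a namespace whose `command` is `"run"` and whose `algorithms` is `["MAG.07"]`; `argparse` supplies `--help` output automatically.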

Utility Scripts: Functionality

The utility scripts also enable manual/targeted testing of specific elements of the SPADES functionality, such as messaging or testing of specific algorithms. SPADES includes functionality for deployment activities such as environment setup, “sourcing” environment parameters, establishing correct paths, creating directories, importing the conda packages, positioning SPADES configuration files, and other sundry deployment-related details. SPADES includes functionality for maintaining a configurable number of days of input and output files for reprocessing or to aid in after-incident troubleshooting and scenario recreation. Python scripts within SPADES can be used for managing/executing LDM (starting, stopping, checking/resetting queues, and otherwise orchestrating LDM to run correctly for SPADES).

Utility Scripts: Latency

There are SPADES utility scripts to compute and report on end-to-end product latency, using file names, execution time stamps, log information and JSON files. These utility scripts compute and report product latencies from the time of the observation aboard the spacecraft, through SPADES execution, through Level 2 product generation, through product handoff to SWPC. The scripts take into account latencies that are permitted and expected upstream (such as the GOES-R Program's product-specific vendor-allocated ground latencies and normal upstream product-aggregation times). These scripts for monitoring product latencies are vital, as many of the GOES/SPADES space weather products are time-critical to SWPC, their partners, and other consumers of SWPC products.

Utility Scripts: Environment Management

SPADES also includes utility scripts for file management, setup and cleanup of the operational environment, and for confirming an operational environment is ready for SPADES executions. SPADES utility scripts keep the operating environment clean, such as by removing extraneous background/daemon processes that may have terminated abnormally.

Miniconda

SPADES relies upon a number of open-source Python packages, and Miniconda is used to aid in package management. Miniconda is baselined to pull in the necessary packages from the appropriate sources, and also helps ensure compatibility and consistency among the various Python packages. The SPADES documentation describes the steps to take to deploy Miniconda.

Documentation

The existing SPADES documentation is stored on VLab, using Redmine and Jenkins. The latest NWS versions of the SPADES software are kept in a git repository on VLab. There is SPADES documentation in Jenkins that describes the steps needed to check out a version of SPADES from the repository, as well as documentation for updating the repository with a new version of SPADES.

Data Formats

For the primary input and output data associated with SPADES, there are two data formats: Flexible Image Transport System (FITS) and Network Common Data Form (netCDF4). SPADES expects SUVI Level 1b input data to be in FITS format, and it produces SUVI Level 2 output products in FITS. The format for all other products (i.e. all EXIS, Magnetometer and SEISS Level 1b input data and Level 2 output products) is netCDF4.
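The format rule above reduces to a lookup by instrument. A minimal sketch, assuming the instrument codes EXIS, MAG, SEISS and SUVI as used elsewhere in this document; the function name is hypothetical.

```python
# Instruments named in this document; SUVI is the only one using FITS.
KNOWN_INSTRUMENTS = {"EXIS", "MAG", "SEISS", "SUVI"}


def data_format(instrument):
    """Return the data format used for an instrument's L1b input and L2 output."""
    code = instrument.upper()
    if code not in KNOWN_INSTRUMENTS:
        raise ValueError(f"unknown instrument: {instrument!r}")
    return "FITS" if code == "SUVI" else "netCDF4"
```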

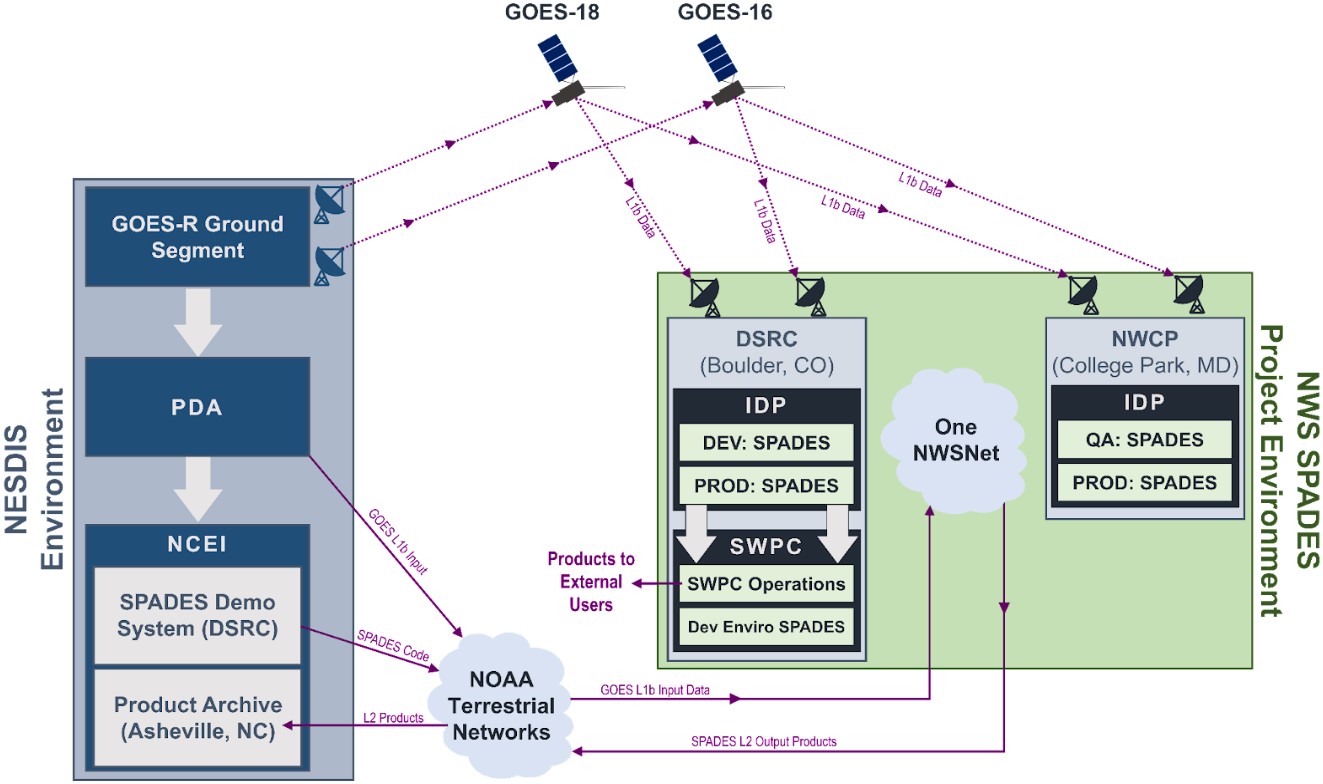

Concept of Operations

As shown below in the SPADES Concept of Operations, the GOES-16 and -18 Level 1b space weather datasets are available from either the NESDIS PDA system or the GRB service. The NCEI demonstration version of SPADES uses the PDA as the data source whereas the NWS versions of SPADES primarily use the GRB service at this time. The Level 1b datasets are transferred from the NWS GRB antenna systems to the NWS SPADES systems via LDM over terrestrial networks. NWS maintains two SPADES development environments: the SWPC development environment and the IDP DEV environment, both located at the David Skaggs Research Center (DSRC) in Boulder, CO. The operational instances of SPADES run on IDP PROD at DSRC and NCWCP. At any given time, one of the two IDP PROD sites is responsible for sending Level 2 products to SWPC and the other serves as a hot standby except during routine maintenance or upgrades, etc.

Given its proximity to SWPC, the Boulder IDP is usually primary for the SPADES application. SWPC systems and forecasters use the GOES-R Series Level 2 products in mission operations to prepare a range of critical space weather products for their users. The hourly Level 2 archive products produced by SPADES are sent to NCEI in Asheville, NC via NOAA terrestrial networks.